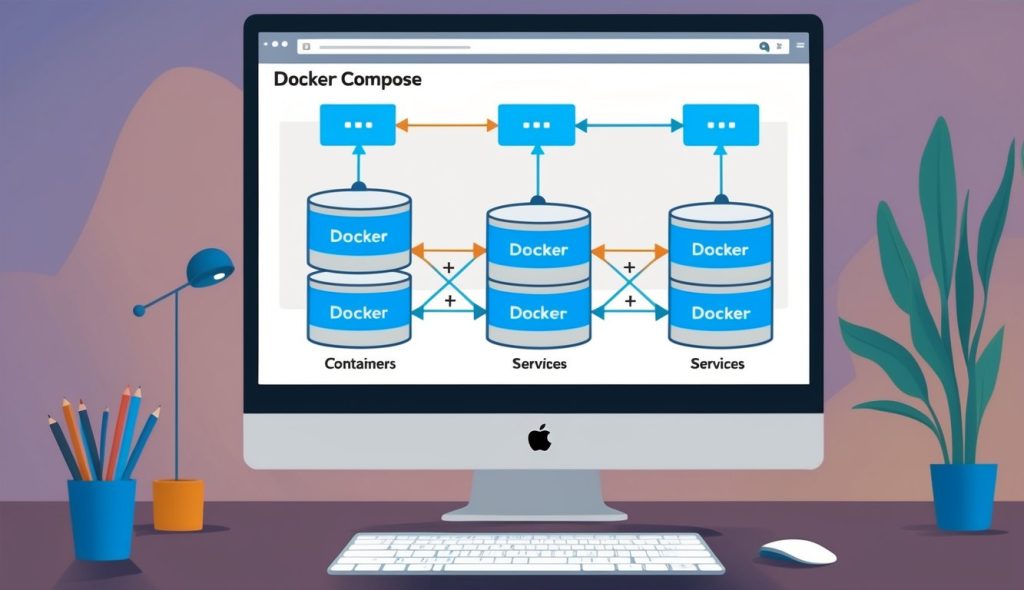

Ever wondered how to run multiple containers without typing lengthy commands? Docker Compose simplifies this process by allowing you to define your application’s services, networks, and volumes in a single YAML file.

Docker Compose helps developers create reproducible environments that work consistently across different machines. This makes it an essential tool for anyone working with containerized applications.

Setting up a multi-container application manually can be time-consuming and error-prone. With Docker Compose, you define the whole application in a configuration file and start it with a single command, instead of typing a long `docker run` command for each container.

This approach streamlines development workflows and makes complex setups more manageable for teams working on various projects.

Docker Compose works especially well for development, testing, and staging environments where consistency is crucial. Developers can specify their application’s dependencies, services, and configuration in one place, ensuring that everyone on the team has the same setup.

Automating the management of several containers in this way reduces the problems that arise from differences between environments.
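As a rough sketch of what such a configuration file can look like, the example below defines two services. The service names (`web`, `cache`), the images, and the port mapping are illustrative assumptions, not taken from a specific project.

```yaml
# compose.yaml (illustrative; service names, images, and ports are assumptions)
services:
  web:
    image: nginx:alpine      # public image pulled from Docker Hub
    ports:
      - "8080:80"            # host port 8080 -> container port 80
    depends_on:
      - cache                # start the cache service before web
  cache:
    image: redis:alpine
```

With this file in the current directory, `docker compose up -d` starts both containers in the background, and `docker compose down` stops and removes them.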

Key Takeaways

- Docker Compose simplifies multi-container management through a single YAML configuration file, eliminating the need for complex Docker commands.

- Beginners can quickly create reproducible development environments that maintain consistency across different machines and team members.

- Docker Compose automates the orchestration of containers, networks, and volumes, making it easier to develop complex applications with multiple interconnected services.

Understanding Docker and Containerization

Docker technology transforms application deployment by packaging software in lightweight, isolated units that run consistently across different environments. These containers remove the “it works on my machine” problem that often plagues development teams.

What Is Docker?

Docker is an open-source platform that automates the deployment, scaling, and management of applications inside containers. It was released in 2013 and quickly became the industry standard for containerization technology.

Docker makes it easy to create, deploy, and run applications by using containers. A container bundles an application with all the dependencies, libraries, and configuration files it needs to run in any environment.

The main components of Docker include:

- Docker Engine: The core system that creates and runs containers

- Docker CLI: Command-line interface for interacting with Docker

- Docker Desktop: An easy-to-install application for Mac, Windows, and Linux

Docker simplifies the development lifecycle by allowing developers to work in standardized environments. This means fewer bugs and more efficient workflows.

Docker Containers vs. Virtual Machines

Docker containers and virtual machines (VMs) both isolate applications, but they work quite differently. Containers share the host system’s kernel, while each VM runs a complete guest operating system on top of a hypervisor.

Key differences:

| Feature | Docker Containers | Virtual Machines |
|---|---|---|
| Size | Lightweight (MBs) | Heavy (GBs) |
| Startup time | Seconds | Minutes |
| Resource usage | Low overhead | Higher overhead |
| Isolation level | Process isolation | Complete isolation |
| OS requirements | Share host OS kernel | Need full OS |

Containers start much faster and use fewer resources than VMs. This efficiency makes Docker containers ideal for microservices architecture and cloud-native applications.

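Linux containers form the foundation of Docker’s technology. They rely on Linux kernel features such as namespaces and cgroups to isolate processes and limit their resources, without the performance cost of running a full guest operating system.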

Core Concepts of Docker: Images and Containers

Docker images are the blueprints for containers. They are read-only templates containing an application along with its dependencies and configurations. Images are built in layers, making them efficient to store and transfer.

Images are created from a Dockerfile, a text file with instructions for building the image. Instructions such as `RUN`, `COPY`, and `ADD` each add a layer to the image, so Docker can reuse cached layers for steps that have not changed when the image is rebuilt.
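To keep the examples in one format, the sketch below inlines Dockerfile instructions in a Compose file through the `dockerfile_inline` build option, which requires a recent Docker Compose release; normally these instructions would live in a separate file named `Dockerfile`. The service name `app`, the base image, and the files `requirements.txt` and `app.py` are hypothetical.

```yaml
# compose.yaml (illustrative; names and files are assumptions)
services:
  app:
    build:
      context: .                      # directory sent to the builder
      dockerfile_inline: |
        # Base image: every later instruction builds on top of it
        FROM python:3.12-slim
        WORKDIR /app
        # Copy the dependency list first so the install step below stays
        # cached until requirements.txt changes
        COPY requirements.txt .
        RUN pip install --no-cache-dir -r requirements.txt
        # Application code changes often, so it is copied last
        COPY . .
        CMD ["python", "app.py"]
```

Ordering the instructions from least to most frequently changed is a common way to get the most out of layer caching.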

Docker containers are runnable instances of images. When you launch a container, Docker creates a writable layer on top of the image layers where the application can run.

Key operations with Docker images include:

- Pulling images from Docker Hub or other registries

- Building custom images with `docker build`

- Pushing images to registries with `docker push`

- Managing local images with `docker image` commands

Multiple containers can run from the same image, each with its own isolated environment.
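As an illustration, the Compose file sketched below starts two containers from the same image. The service names and host ports are assumptions chosen for the example.

```yaml
# compose.yaml (illustrative; service names and ports are assumptions)
services:
  site-a:
    image: nginx:alpine      # same read-only image layers...
    ports:
      - "8081:80"
  site-b:
    image: nginx:alpine      # ...but a separate container with its own writable layer
    ports:
      - "8082:80"
```

Both containers share the image layers on disk, while changes made inside one container do not affect the other.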

The Docker Ecosystem

The Docker ecosystem extends beyond the core containerization technology to include a rich set of tools and services.

Docker Hub is a cloud-based registry service where users can find and share Docker images. It hosts thousands of public images from software vendors, open-source projects, and the community.

Docker Compose allows defining multi-container applications in a single YAML file. It simplifies the management of interconnected containers by handling networking and volume mounting.
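The sketch below shows one way networking and volumes can be declared in a Compose file. The service names, the network name `backend`, the volume name `db-data`, and the placeholder password are all assumptions made for illustration.

```yaml
# compose.yaml (illustrative; all names and values are assumptions)
services:
  app:
    image: nginx:alpine
    networks:
      - backend                              # app can reach the database at hostname "db"
  db:
    image: postgres:16
    environment:
      POSTGRES_PASSWORD: example             # placeholder only, not for real use
    volumes:
      - db-data:/var/lib/postgresql/data     # named volume keeps data across restarts
    networks:
      - backend

volumes:
  db-data:                                   # created and managed by Docker

networks:
  backend:                                   # user-defined network shared by both services
```

Services attached to the same network can reach each other by service name, and data stored in a named volume survives when the containers are removed and recreated.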

Other important ecosystem components include:

- Docker Swarm: Native clustering for Docker

- Docker Registry: Private storage for Docker images

- Docker Trusted Registry (now Mirantis Secure Registry): Secure, enterprise-grade image storage

Third-party tools like Kubernetes, Portainer, and Rancher complement Docker by providing advanced orchestration and management capabilities.

The ecosystem continues to grow as more organizations adopt containerization for development, testing, and production environments.