Getting Started with Docker Compose

Docker Compose simplifies container management by allowing you to run multi-container applications with a single command. It uses a YAML configuration file to define services, networks, and volumes required for your application.
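Here is a rough illustration of how those three parts fit together: a minimal compose.yaml with one service, one custom network, and one named volume. The names app, appnet, and appdata are hypothetical placeholders, and nginx:latest stands in for any image.

```yaml
# Hypothetical minimal compose.yaml; all names are placeholders.
services:                 # containers that make up the application
  app:
    image: nginx:latest   # image pulled from Docker Hub
    networks:
      - appnet            # attach to the custom network declared below
    volumes:
      - appdata:/usr/share/nginx/html   # mount the named volume declared below
networks:                 # custom networks (optional)
  appnet:
volumes:                  # named volumes for persistent data (optional)
  appdata:
```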

Installing Docker and Docker Compose

Before using Docker Compose, you need to install both Docker and Docker Compose on your system. The installation process varies depending on your operating system.

On Windows and macOS, download and install Docker Desktop. It bundles Docker Engine and Docker Compose, along with a graphical interface for managing containers.

On Linux, first install Docker Engine with your distribution's package manager. On Debian or Ubuntu, for example:

sudo apt update

sudo apt install docker.io

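Then install Docker Compose separately. One option is the standalone binary from the project's GitHub releases page (release asset names use lowercase "linux"):

sudo curl -L "https://github.com/docker/compose/releases/download/v2.23.0/docker-compose-linux-$(uname -m)" -o /usr/local/bin/docker-compose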

sudo chmod +x /usr/local/bin/docker-compose

Verify the installation by checking both versions:

docker --version

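docker-compose --version

If Compose is installed as a Docker CLI plugin, as it is in Docker Desktop, it is also available as docker compose (with a space). In that case, docker compose version prints its version.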

Basic Docker Commands

Understanding basic Docker CLI commands helps when working with Docker Compose. These commands manage containers, images, and other Docker resources.

To pull an image from Docker Hub:

docker pull image-name

To list running containers:

docker ps

To list all containers (including stopped ones):

docker ps -a

To run a container from an image:

docker run image-name

To stop a running container (by container ID or name):

docker stop container-id

To remove a stopped container:

docker rm container-id

These Docker commands form the foundation for understanding how containers work before using Docker Compose for more complex setups.

Your First Docker Compose File

The heart of Docker Compose is its configuration file, conventionally named compose.yaml. Compose also accepts the older name docker-compose.yml, which many existing projects still use. This file defines all services, networks, and volumes for your application.

Create a file named compose.yaml in your project directory:

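services:
  web:
    image: nginx:latest
    ports:
      - "8080:80"
  database:
    image: mysql:5.7
    environment:
      MYSQL_ROOT_PASSWORD: example
      MYSQL_DATABASE: myapp

Older tutorials often start the file with a top-level version key, such as version: '3'. Compose v2 ignores this key, and recent releases warn that it is obsolete, so it is left out here.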

This example defines two services: a web server using Nginx and a MySQL database. The web server maps port 8080 on your host to port 80 in the container.

To start these services, run:

docker-compose up

To run in detached mode (background):

docker-compose up -d

To stop and remove the containers and networks created by up:

docker-compose down

For beginners, Docker Compose makes deploying multi-container applications much simpler than starting each container with its own docker run command.

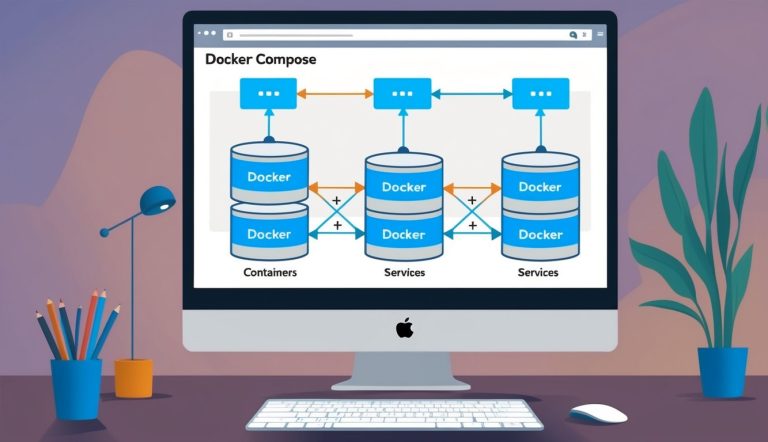

Docker Compose Core Features

Docker Compose offers several capabilities that simplify managing multi-container applications. You declare services, networks, and volumes in a single YAML file, and Compose builds, starts, and connects the containers to match it.

Services, Networks, and Volumes

Services in Docker Compose represent your application’s containers. Each service is defined in the Compose file (compose.yaml or docker-compose.yml) with its own configuration. A service either uses an existing image or is built from a Dockerfile.
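The fragment below sketches both options. It assumes a hypothetical ./web directory containing a Dockerfile and a hypothetical tag myapp-web:dev. When a service sets both build and image, Compose builds the image and tags it with that name.

```yaml
services:
  web:
    build:
      context: ./web          # hypothetical directory containing the Dockerfile
      dockerfile: Dockerfile  # path relative to the context (this is the default)
    image: myapp-web:dev      # hypothetical tag applied to the built image
  cache:
    image: redis:7            # prebuilt image pulled from Docker Hub
```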

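Networks enable communication between your containers. By default, Compose creates a single network for your application and attaches every service to it, so each service can reach the others by using the service name as a hostname. You can define custom networks when you need more control, for example to keep a database off the network used by public-facing services.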

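services:
  web:
    build: ./web
    networks:
      - frontend
      - backend
  db:
    image: postgres
    environment:
      POSTGRES_PASSWORD: example
    networks:
      - backend
networks:
  frontend:
  backend:

Here web joins both networks so it can reach db. The db service is attached only to backend, so containers that sit only on frontend cannot reach it. The postgres image will not initialize without a POSTGRES_PASSWORD value, so a placeholder is set.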

Volumes provide persistent storage for your containers. Data in a volume survives when a container is stopped, removed, or re-created, and a volume can be shared between services. This is crucial for databases and other applications that need to keep state.
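As an example, the sketch below keeps a PostgreSQL data directory in a named volume. That data survives docker-compose down and is deleted only by docker-compose down -v. The volume name db-data, the ./init directory, and the password are placeholders. The image is pinned to postgres:16, whose data directory is /var/lib/postgresql/data.

```yaml
services:
  db:
    image: postgres:16
    environment:
      POSTGRES_PASSWORD: example   # placeholder credential, not for production
    volumes:
      # Named volume managed by Docker; it outlives the container.
      - db-data:/var/lib/postgresql/data
      # Bind mount of a hypothetical host directory; the image runs the
      # *.sql and *.sh files in it only when initializing an empty data directory.
      - ./init:/docker-entrypoint-initdb.d:ro
volumes:
  db-data:
```

Listing db-data under a second service would mount the same data there as well.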

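Docker Compose manages these components together. The depends_on option declares relationships between services and controls their startup order. By default, it only waits for the dependency's container to start, not for the application inside it to be ready.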
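To wait until a database actually accepts connections, the long form of depends_on can require a passing healthcheck. The sketch below assumes a hypothetical ./web build context and uses pg_isready, which ships in the official postgres image, as the health test. The timing values are arbitrary examples.

```yaml
services:
  web:
    build: ./web                      # hypothetical build context
    depends_on:
      db:
        condition: service_healthy    # start web only after db reports healthy
  db:
    image: postgres:16
    environment:
      POSTGRES_PASSWORD: example      # placeholder credential
    healthcheck:
      test: ["CMD-SHELL", "pg_isready -U postgres"]  # exits 0 once the server accepts connections
      interval: 5s
      timeout: 3s
      retries: 5
```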