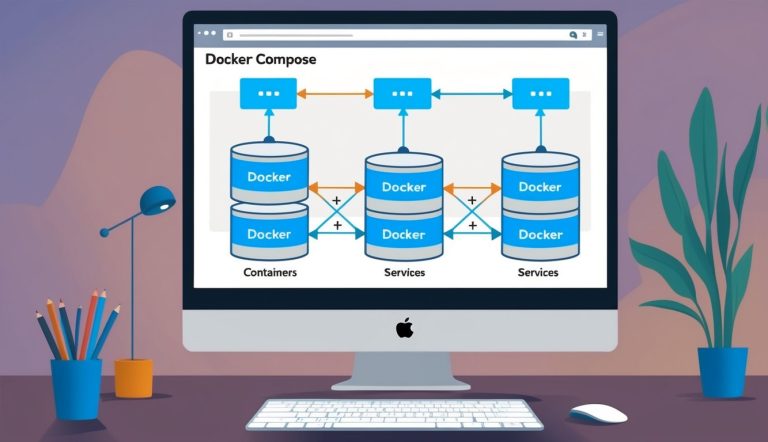

Building and Running Containers with Compose

Docker Compose manages multi-container applications through a small set of commands. The docker compose up command builds any images that are missing, then creates and starts every service defined in your Compose file.

During development, add the --build flag to rebuild images before the containers start, so that code changes are picked up:

    docker compose up --build

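Compose starts containers in dependency order. With the depends_on option, the services a container depends on are started first; in the example below, db starts before web. This governs start order only: Compose waits for the db container to start, not for Postgres inside it to accept connections.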

    services:
      web:
        depends_on:
          - db
      db:
        image: postgres
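One way to wait for readiness rather than mere startup is the long form of depends_on with condition: service_healthy, backed by a health check on db. The sketch below writes such a Compose file into a scratch directory. The pg_isready probe, the timings, the placeholder password, and build: . (which assumes a Dockerfile next to the Compose file) are illustrative assumptions, not part of the configuration above.

```shell
# Write an illustrative compose.yaml into a scratch directory.
workdir="$(mktemp -d)"
cat > "$workdir/compose.yaml" <<'EOF'
services:
  web:
    build: .                          # assumption: a Dockerfile sits next to this file
    depends_on:
      db:
        condition: service_healthy    # start web only after db reports healthy
  db:
    image: postgres
    environment:
      POSTGRES_PASSWORD: postgres     # placeholder; the official image needs a password to initialize
    healthcheck:
      test: ["CMD-SHELL", "pg_isready -U postgres"]
      interval: 5s
      timeout: 3s
      retries: 5
EOF

# Validate the file when the Docker CLI is available; skip otherwise.
if command -v docker >/dev/null 2>&1; then
  docker compose -f "$workdir/compose.yaml" config --quiet
fi
```

With this file, docker compose up holds web back until the pg_isready probe succeeds, or fails the startup if db never becomes healthy.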

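To start only specific services, name them on the command line; add -d to run in detached (background) mode. Starting web this way also starts its dependency, db: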

    docker compose up -d web

To run several instances of a service at once, pass the --scale option with a service name and a count, for example --scale web=3.
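As a sketch of scaling in practice, the script below assumes a stateless web service (a placeholder nginx:alpine image, not taken from this article) that publishes only a container port, so each replica receives its own ephemeral host port. A fixed host port mapping such as 8080:80, or a container_name, would make the extra replicas collide.

```shell
# Write an illustrative compose.yaml into a scratch directory.
workdir="$(mktemp -d)"
cat > "$workdir/compose.yaml" <<'EOF'
services:
  web:
    image: nginx:alpine   # placeholder image
    ports:
      - "80"              # container port only; Docker picks a free host port per replica
EOF

replicas=3   # illustrative count

if command -v docker >/dev/null 2>&1; then
  # Start the replicas in the background, then list them with their host ports.
  docker compose -f "$workdir/compose.yaml" up -d --scale "web=${replicas}"
  docker compose -f "$workdir/compose.yaml" ps
fi
```

Clean up afterwards with docker compose -f "$workdir/compose.yaml" down.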

Managing Containerized Applications

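Docker Compose also manages the lifecycle of containerized applications. The docker compose down command stops and removes the project's containers and networks. Named volumes are kept by default so that data survives; add the --volumes (-v) flag to remove them as well.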

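Environment variables reach containers through a service's environment section or through files listed under env_file. Separately, Compose reads a .env file in the project directory and substitutes its values into ${VAR} references in the Compose file, which lets one file serve several environments.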

    services:
      web:
        environment:
          - DEBUG=True
          - DATABASE_URL=postgres://postgres:postgres@db:5432/app
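To keep credentials out of the Compose file itself, the values can come from a .env file instead. The following is a minimal sketch under assumptions: the variable names, the change-me password, and build: . are illustrative, and the heredocs are quoted so the shell leaves the ${...} references for Compose to resolve.

```shell
workdir="$(mktemp -d)"

# Compose reads .env from the project directory for ${...} substitution.
cat > "$workdir/.env" <<'EOF'
POSTGRES_PASSWORD=change-me
DEBUG=False
EOF

cat > "$workdir/compose.yaml" <<'EOF'
services:
  web:
    build: .              # assumption: a Dockerfile sits next to this file
    environment:
      - DEBUG=${DEBUG:-False}
      - DATABASE_URL=postgres://postgres:${POSTGRES_PASSWORD}@db:5432/app
  db:
    image: postgres
    environment:
      - POSTGRES_PASSWORD=${POSTGRES_PASSWORD}
EOF

# Print the configuration with variables substituted, when Docker is available.
if command -v docker >/dev/null 2>&1; then
  (cd "$workdir" && docker compose config)
fi
```

Because docker compose config prints the substituted values, including the password, it is best kept out of shared logs. The .env file itself usually belongs in .gitignore.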

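Health checks, declared under a service's healthcheck key, tell Compose whether a container is actually working rather than merely started; docker compose ps shows the resulting status. Compose also creates a default network for each project and supports other network modes, such as host or none, for services with different isolation requirements.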

Keeping your Compose files in version control supports infrastructure-as-code practices: changes to your application architecture can be tracked and reviewed alongside the application code.

Best Practices for Dockerfiles and Images

Creating efficient Docker images requires careful planning and adherence to best practices. These techniques will help you build smaller, more secure, and easier-to-maintain containerized applications.

Writing Maintainable Dockerfiles

A well-structured Dockerfile serves as the blueprint for your container. Start by selecting a minimal base image that meets your requirements. Alpine Linux is popular for its small size, while distroless images go further by leaving out the shell and package manager.

Use clear, descriptive comments to explain complex sections. Group related commands together to improve readability. For example:

    # Install dependencies
    RUN apt-get update && \
        apt-get install -y python3 python3-pip && \
        apt-get clean && \
        rm -rf /var/lib/apt/lists/*

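Order instructions from least to most frequently changed. When an instruction's inputs change, Docker rebuilds that layer and every layer after it, so keeping volatile steps last preserves as much of the cache as possible.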

Multi-stage builds produce leaner images by separating build-time dependencies from runtime needs. Compilers and build tools stay in an earlier stage, and only the built artifacts are copied into the final image, which can reduce its size substantially.
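A multi-stage build might look like the sketch below, which writes an example Dockerfile into a scratch directory. It assumes a Node.js project using Yarn 1 with a build script that emits ./dist and a hypothetical entry point dist/server.js; the node:20 and node:20-alpine base images are illustrative choices as well.

```shell
workdir="$(mktemp -d)"
cat > "$workdir/Dockerfile" <<'EOF'
# Stage 1: install all dependencies and compile the app.
FROM node:20 AS build
WORKDIR /app
COPY package.json yarn.lock ./
RUN yarn install --frozen-lockfile
COPY . .
# Assumption: package.json defines a "build" script that writes to ./dist
RUN yarn build

# Stage 2: start from a smaller base and keep only what runs in production.
FROM node:20-alpine
WORKDIR /app
ENV NODE_ENV=production
COPY package.json yarn.lock ./
RUN yarn install --frozen-lockfile --production
COPY --from=build /app/dist ./dist
# Assumption: hypothetical entry point
CMD ["node", "dist/server.js"]
EOF
```

Only the second stage ends up in the final image: the full dependency tree and build tooling from the first stage are discarded once dist has been copied across.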

Efficient Docker Builds and Caching

Docker’s build cache is powerful but rewards planning. Docker caches the result of each Dockerfile instruction (RUN, COPY, and ADD produce filesystem layers) and reuses it for as long as the instruction and its inputs are unchanged.

To leverage caching effectively:

- Place commands that change infrequently early in the Dockerfile.

- Copy only the files each step needs, so that unrelated changes do not invalidate its cache.

- Use .dockerignore to exclude irrelevant files from the build context.
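As an illustration of the last point, the sketch below writes a small .dockerignore into a scratch directory. The entries are typical for a Node.js project and are assumptions; adjust them to whatever your build does not need.

```shell
workdir="$(mktemp -d)"
cat > "$workdir/.dockerignore" <<'EOF'
# Version control metadata
.git
# Dependencies are installed inside the image
node_modules
# Local secrets, logs, and OS clutter
.env
*.log
.DS_Store
EOF
```

Excluding node_modules and .git shrinks the context sent to the builder and keeps a later COPY . . from invalidating the cache when only those directories change.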

For application dependencies, copy just the dependency files first:

    COPY package.json yarn.lock ./
    RUN yarn install
    COPY . .

This pattern ensures dependencies aren’t reinstalled unless actual dependency files change. The Docker build cache works most efficiently when changes are isolated to specific layers.

Managing Docker Images

Proper image management prevents wasted storage and security vulnerabilities. Tag images meaningfully with version numbers or Git commit hashes rather than just using “latest.”
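One possible way to derive such a tag is from the current Git commit. In this sketch the image name myapp is hypothetical, and the fallback tag dev is an assumption for directories that are not Git checkouts.

```shell
image="myapp"   # hypothetical image name

# Use the short commit hash inside a Git checkout, else fall back to "dev".
if revision="$(git rev-parse --short HEAD 2>/dev/null)"; then
  tag="$revision"
else
  tag="dev"
fi

echo "Building ${image}:${tag}"

# Build only when the Docker CLI and a Dockerfile are both present.
if command -v docker >/dev/null 2>&1 && [ -f Dockerfile ]; then
  docker build -t "${image}:${tag}" .
fi
```

A tag tied to a commit makes it possible to trace a running container back to the exact source that produced it.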

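Regularly prune unused images. With the -a flag, this removes every image not used by a container, not just dangling ones, so run it deliberately: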

    docker image prune -a

Implement a vulnerability scanning solution to identify security issues in your Docker images. Many CI/CD pipelines can integrate scanning during the build process.

Keep images small by:

- Removing development dependencies

- Clearing package manager caches

- Using the --no-install-recommends flag with apt-get

- Combining RUN commands with && to reduce layer count

Consider using an image registry to store and distribute your images across environments. This provides version control and simplifies deployment workflows.
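Publishing to a registry comes down to tagging the image with the registry's address and pushing it. The registry host, namespace, image name, and version below are all hypothetical, and the sketch assumes that you have already run docker login against that registry and built myapp:1.4.2 locally.

```shell
registry="registry.example.com/team"   # hypothetical registry and namespace
image="myapp"                          # hypothetical image name
version="1.4.2"                        # illustrative version

remote="${registry}/${image}:${version}"
echo "Publishing ${remote}"

if command -v docker >/dev/null 2>&1; then
  # Give the local image its registry-qualified name, then upload it.
  docker tag "${image}:${version}" "$remote"
  docker push "$remote"
fi
```

Other environments can then fetch exactly this version with docker pull and the same reference.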