Deployment and Operations

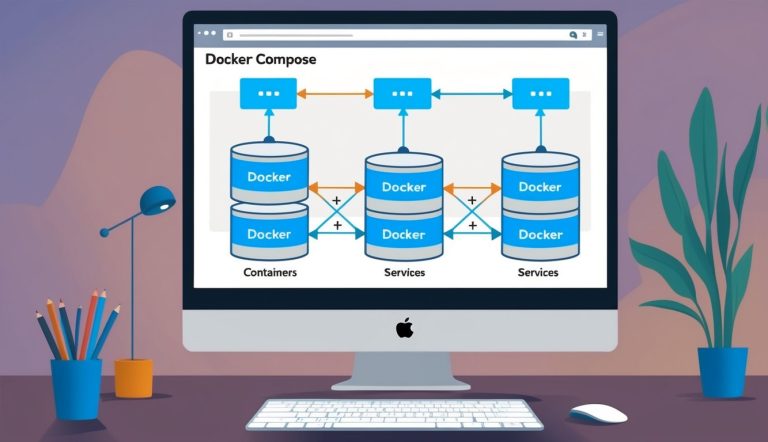

Moving Docker Compose applications from your local environment to production requires specific strategies and tools. Proper deployment practices ensure your containerized applications run reliably in various environments.

From Development to Deployment

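When taking your Docker Compose setup to production, you’ll need to adjust your configuration files. Keep the shared service definitions in docker-compose.yml and create separate compose files for each environment. Compose automatically merges docker-compose.override.yml on top of docker-compose.yml, which makes it a good place for development-specific settings.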
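As a minimal sketch of the override pattern, assuming a hypothetical service named web whose source code lives in ./src, a development override file might look like the following. The service name, paths, port, and variable are illustrative assumptions.

```yaml
# docker-compose.override.yml
# Development-only settings. Service name, paths, port, and variable
# are hypothetical and only illustrate the pattern.
services:
  web:
    build: .
    volumes:
      - ./src:/app/src        # bind-mount source code for live editing
    environment:
      APP_ENV: development
    ports:
      - "8000:8000"
```

Running docker compose up without any -f flag reads docker-compose.yml first and then applies this file on top of it.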

For production deployment, use a separate file such as docker-compose.prod.yml, passed explicitly with the -f flag, with optimized settings like:

- Removed volume bindings for code

- Production-ready environment variables

- Proper restart policies (restart: always)

- Resource constraints for containers
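The settings listed above could be combined roughly as follows. This is a sketch under assumptions: the image name, tag, and environment variable are hypothetical, and the limits are placeholder values.

```yaml
# docker-compose.prod.yml
# Sketch only: image name, tag, variable, and limits are hypothetical.
services:
  web:
    image: registry.example.com/myapp/web:1.4.2   # prebuilt image, no code bind mount
    restart: always                               # restart after crashes and reboots
    environment:
      APP_ENV: production
    deploy:
      resources:
        limits:
          cpus: "0.50"      # at most half a CPU core
          memory: 256M      # hard memory cap
```

A file like this would typically be applied with docker compose -f docker-compose.yml -f docker-compose.prod.yml up -d. Passing -f explicitly means docker-compose.override.yml is not loaded, so development bind mounts stay out of production. Older docker-compose v1 releases may need the --compatibility flag for the deploy limits to take effect.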

Check your running containers in production with docker ps (or docker compose ps to list only this project's services) to verify everything is working as expected.

Consider using Docker Swarm or Kubernetes for more complex deployments. These orchestration tools provide better scaling and failover capabilities than basic Docker Compose deployments.

Continuous Integration and Continuous Deployment Practices

Implementing CI/CD pipelines automates testing and deployment of Docker Compose applications. Popular tools like Jenkins, GitLab CI, and GitHub Actions can streamline multi-container app deployment.

A basic CI/CD workflow includes:

- Building images on code changes

- Running automated tests in containers

- Pushing images to a registry (Docker Hub, AWS ECR)

- Deploying updated compose stack to servers
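The four steps above can be sketched as a GitHub Actions workflow. Treat this as an illustration under stated assumptions: the service name web, the test command, the secret names, and the deploy script are all hypothetical, and the login step targets Docker Hub by default.

```yaml
# .github/workflows/deploy.yml (hypothetical file name)
name: build-test-deploy

on:
  push:
    branches: [main]

jobs:
  pipeline:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4

      - name: Build images
        run: docker compose build

      - name: Run automated tests in a container
        # "web" and "pytest" are assumptions about the project
        run: docker compose run --rm web pytest

      - name: Log in to the registry
        uses: docker/login-action@v3
        with:
          username: ${{ secrets.REGISTRY_USER }}    # hypothetical secret name
          password: ${{ secrets.REGISTRY_TOKEN }}   # hypothetical secret name

      - name: Push images
        run: docker compose push

      - name: Deploy updated stack
        # Hypothetical script that pulls the new images on the server
        # and runs "docker compose up -d" there.
        run: ./scripts/deploy.sh
```

Each step fails the job on a non-zero exit code, so images are pushed and deployed only when the build and tests succeed.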

Set up environment-specific variables in your CI system rather than hardcoding them in compose files. This approach keeps secrets out of version control and lets the same compose file serve every environment.
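One way to do this is Compose variable interpolation, where values come from the shell or CI environment at deploy time. The sketch below assumes hypothetical variable names IMAGE_TAG and DATABASE_URL and a hypothetical image.

```yaml
# docker-compose.yml (fragment)
# IMAGE_TAG and DATABASE_URL are hypothetical names supplied by the CI system.
services:
  web:
    # Falls back to "latest" when IMAGE_TAG is unset or empty
    image: "registry.example.com/myapp/web:${IMAGE_TAG:-latest}"
    environment:
      # Compose aborts with this message if DATABASE_URL is unset or empty
      DATABASE_URL: "${DATABASE_URL:?DATABASE_URL must be set}"
```

The ${VAR:?message} form makes a missing value fail fast instead of silently starting the service with an empty setting.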

Docker Compose works well with continuous integration systems because it defines the entire application stack in code. This makes it easier to test complete environments before deploying to production.

Security and Isolation in Docker

Docker builds on Linux kernel features, chiefly namespaces and control groups, to protect your containerized applications. These isolation techniques keep containers separate from each other and from the host system.

Ensuring Container Security

Docker containers need proper security measures to protect your applications and data. Always use the latest Docker version to benefit from security patches and improvements.

Docker’s built-in network isolation helps separate containers that don’t need to communicate. This limits potential attack paths through container networks.
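In a compose file, this separation can be expressed with user-defined networks. The following is a sketch with hypothetical service names and a hypothetical application image: the proxy can reach the app, the app can reach the store, but the proxy and the store share no network.

```yaml
# Service names and the app image are hypothetical.
services:
  proxy:
    image: nginx:alpine
    networks: [frontend]
    ports:
      - "80:80"
  app:
    image: registry.example.com/myapp/web:1.4.2
    networks: [frontend, backend]
  store:
    image: redis:7-alpine
    networks: [backend]     # not reachable from the proxy

networks:
  frontend:
  backend:
    internal: true          # no connectivity to outside networks
```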

Run containers with the least privileges possible. Use non-root users inside containers to reduce risks if a container is compromised.
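A least-privilege service definition might look like this sketch. The image name and the UID:GID are assumptions, and the image must be built so that the application works as that user and with a read-only root file system.

```yaml
# Image name and UID:GID are hypothetical.
services:
  web:
    image: registry.example.com/myapp/web:1.4.2
    user: "1000:1000"             # run as a non-root user and group
    read_only: true               # root file system mounted read-only
    tmpfs:
      - /tmp                      # writable scratch space kept in memory
    cap_drop:
      - ALL                       # drop all Linux capabilities
    security_opt:
      - no-new-privileges:true    # block privilege escalation via setuid binaries
```

If the application needs a specific capability, it can be added back selectively with cap_add rather than keeping the full default set.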

Scan your container images for vulnerabilities before deployment. Tools such as Docker Scout or Trivy can identify known security issues in your images.
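A scan can run as a pipeline step so that vulnerable images never reach production. The fragment below is a sketch of a single GitHub Actions step that uses the open-source scanner Trivy, which is one possible scanner chosen for this example. The image reference is hypothetical.

```yaml
# Fragment: one step inside a GitHub Actions job's "steps" list.
# The scanned image reference is hypothetical.
- name: Scan image for known vulnerabilities
  run: |
    docker run --rm \
      -v /var/run/docker.sock:/var/run/docker.sock \
      aquasec/trivy:latest image \
      --exit-code 1 --severity HIGH,CRITICAL \
      registry.example.com/myapp/web:1.4.2
```

With --exit-code 1, the step fails when findings of the listed severities are reported, which stops the pipeline before deployment.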

Keep your base images minimal. Fewer components mean fewer potential security vulnerabilities. Alpine-based images are popular for their small size and reduced attack surface.

Namespaces and Isolation Features

Namespaces provide the first and most straightforward form of isolation in Docker. They create separate environments for processes running in containers, preventing them from seeing or affecting processes outside their namespace.

Docker uses several Linux namespace types, including:

- PID namespace: Isolates process IDs

- Network namespace: Provides separate network interfaces

- Mount namespace: Gives containers their own file system view

- UTS namespace: Allows containers to have their own hostname
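These namespaces are created by default, so a compose file normally needs no configuration for them. The sketch below, with a hypothetical hostname, shows one setting that relies on the UTS namespace and, commented out, the options that would share the host's namespaces and remove the corresponding isolation.

```yaml
services:
  web:
    image: nginx:alpine
    hostname: web-1          # hypothetical; set inside this container's own UTS namespace
    # The container also gets its own PID, network, and mount namespaces
    # by default. The options below opt out of that isolation and are
    # shown only so they can be recognized in reviews:
    # pid: "host"            # would expose host processes to the container
    # network_mode: "host"   # would share the host's network interfaces
    # uts: "host"            # would share the host's hostname
```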

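These isolation features limit what a compromised container can see and reach, which helps protect other containers and the host. The operating system kernel enforces these boundaries. Because all containers share that kernel, the isolation is not absolute, and keeping the host patched remains important.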

Resource limits through control groups (cgroups) prevent containers from consuming excessive CPU, memory, or disk I/O. This protects against both accidental resource exhaustion and denial-of-service attacks.
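In Compose, such limits can be set per service. This is a sketch with placeholder values and a hypothetical image. It uses the service-level attributes from the Compose specification, which Docker translates into cgroup settings.

```yaml
# Image name and all limit values are placeholders.
services:
  worker:
    image: registry.example.com/myapp/worker:1.4.2
    cpus: 1.5               # at most one and a half CPU cores
    mem_limit: 512m         # hard memory cap
    mem_reservation: 256m   # soft limit applied under memory pressure
    pids_limit: 200         # cap on the number of processes, limits fork bombs
```

The Compose specification also defines a blkio_config attribute for block I/O settings; its behavior depends on the host's cgroup setup, so it is left out of this sketch.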