Advanced Docker Compose Topics

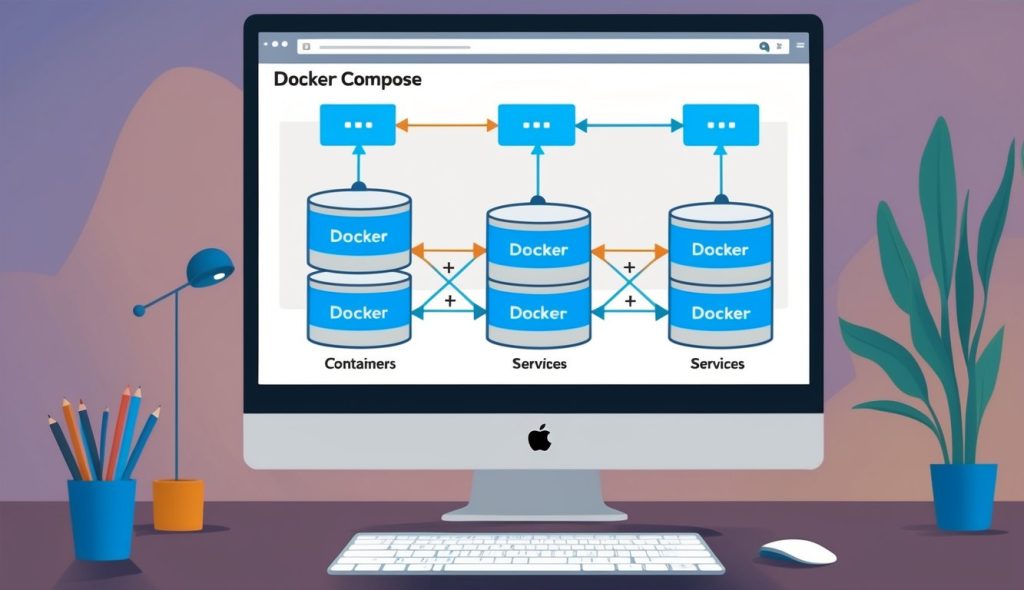

Once you master Docker Compose basics, several advanced capabilities allow you to build more complex, resilient applications. These features help with orchestration, scaling, and managing distributed systems across multiple hosts.

Docker Swarm and Kubernetes

Docker Swarm and Kubernetes are container orchestration platforms that run containers across multiple machines, which goes beyond the single Docker host that Compose targets. Swarm mode is built into Docker Engine, making it a simpler option for beginners looking to scale beyond a single host.

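With Docker Swarm, you can turn a group of Docker hosts into a single virtual host. The command docker swarm init turns the current Docker Engine into a swarm manager, and other hosts join with docker swarm join. A Compose file can then be deployed to the swarm as a stack using docker stack deploy. Stack deployments run prebuilt images, because docker stack deploy ignores the build key.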
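As a minimal sketch of a stack file, assuming a prebuilt placeholder image and a stack name of demo (neither comes from the article), the deploy section carries the swarm-specific settings:

```yaml
# compose.yaml, deployed with:
#   docker swarm init
#   docker stack deploy -c compose.yaml demo
services:
  web:
    image: nginx:alpine        # placeholder image for illustration
    ports:
      - "8080:80"
    deploy:
      replicas: 3              # run three tasks of this service
      update_config:
        parallelism: 1         # replace one task at a time during updates
        delay: 10s
      restart_policy:
        condition: on-failure
```

The replica count and update settings here are arbitrary starting values; tune them to the workload.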

Kubernetes offers more robust features for large-scale deployments. It provides advanced container orchestration with automated rollouts, rollbacks, and self-healing capabilities.

For Kubernetes, you can convert Docker Compose files to Kubernetes manifests using tools like Kompose. The generated manifests give teams a starting point for moving from a Compose-based development setup to a Kubernetes cluster, though they usually need review before production use.
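A small sketch of a Compose file prepared for conversion might look like this. The image is a placeholder, and the kompose.service.type label is an optional Kompose hint about which kind of Kubernetes Service to generate; check the Kompose documentation for the labels your version supports:

```yaml
# Convert with: kompose convert -f compose.yaml
services:
  web:
    image: nginx:alpine                    # placeholder image
    ports:
      - "80:80"
    labels:
      kompose.service.type: loadbalancer   # ask for a LoadBalancer Service
```

Kompose typically writes one manifest file per generated resource into the current directory, which can then be reviewed and applied with kubectl apply -f.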

Scaling and Load Balancing Containers

Docker Compose makes scaling services straightforward with the --scale flag. For example, running docker-compose up --scale web=3 (or docker compose up --scale web=3 with the Compose V2 plugin) launches three instances of a web service defined in your compose file.

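Compose does not place a load balancer in front of scaled containers by itself. On the Compose network, Docker’s embedded DNS resolves the service name to the addresses of all running replicas, which gives other containers a basic round-robin distribution. A service that publishes a fixed host port (such as "80:80") cannot be scaled on one host, because only one container can bind that port. In swarm mode, the routing mesh load-balances published ports across replicas, which improves availability and performance under heavy load.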
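One practical detail when scaling on a single host: a fixed mapping such as "8000:8000" can be bound by only one container. A minimal sketch that avoids the conflict, assuming the web service listens on port 8000, publishes only the container port and lets Docker choose a free host port per replica:

```yaml
services:
  web:
    build: ./web
    ports:
      - "8000"   # container port only; Docker assigns a free host port per replica

# Start three replicas and list the assigned host ports:
#   docker-compose up -d --scale web=3
#   docker-compose ps
```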

For more control over traffic distribution, consider adding a dedicated load balancer like Nginx or Traefik to your Compose file:

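    services:
      loadbalancer:
        image: nginx
        # The stock image only serves a default page; mount an nginx
        # configuration that proxies requests to the web service.
        ports:
          - "80:80"
        depends_on:
          - web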
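For comparison, here is a sketch of the Traefik variant. It assumes the traefik:v3.0 image tag, a web service listening on port 8000, and the hostname web.localhost, all chosen for illustration. Traefik discovers containers through the Docker socket and balances across every replica of web:

```yaml
services:
  loadbalancer:
    image: traefik:v3.0                               # assumed tag
    command:
      - "--providers.docker=true"
      - "--providers.docker.exposedbydefault=false"   # only route labeled services
      - "--entrypoints.web.address=:80"
    ports:
      - "80:80"
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock:ro  # lets Traefik watch containers

  web:
    build: ./web
    labels:
      - "traefik.enable=true"
      - "traefik.http.routers.web.rule=Host(`web.localhost`)"
      - "traefik.http.routers.web.entrypoints=web"
      - "traefik.http.services.web.loadbalancer.server.port=8000"
```

Because web publishes no host port of its own, it can be scaled freely while Traefik remains the single entry point on port 80.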

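Health checks can also be configured so that Docker tracks whether each container is actually ready to serve. Swarm mode uses this status to route traffic only to healthy containers; with plain Compose, the status is recorded and can be used by other tooling or to order service startup: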

    services:
      web:
        healthcheck:
          # curl must be installed in the image for this test to run
          test: ["CMD", "curl", "-f", "http://localhost"]
          interval: 30s
          timeout: 10s
          retries: 3
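Within Compose itself, a common use of health status is startup ordering. The following sketch assumes a Postgres database and uses pg_isready, which ships in the official postgres image, so that web starts only after the database reports healthy:

```yaml
services:
  database:
    image: postgres
    environment:
      POSTGRES_PASSWORD: example
    healthcheck:
      test: ["CMD-SHELL", "pg_isready -U postgres"]
      interval: 10s
      timeout: 5s
      retries: 5

  web:
    build: ./web
    depends_on:
      database:
        condition: service_healthy   # wait for the health check to pass
```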

Understanding the Underlying Systems

Docker Compose runs on several platforms, and knowing how each one hosts containers helps you configure and troubleshoot it. Docker’s containerization technology relies heavily on the host operating system’s capabilities and features.

Linux Fundamentals for Docker Users

Linux forms the foundation of Docker technology, providing essential components that make containerization possible. Docker containers use the Linux kernel’s namespaces and cgroups features to create isolated environments.

Namespaces isolate containers by limiting what processes can see, while cgroups control resource allocation like CPU and memory. These features are native to Linux, making it the most natural environment for Docker.
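The cgroup side is visible directly in a Compose file. As a sketch with arbitrary limit values and a placeholder image, these service-level keys translate into cgroup limits on the container:

```yaml
services:
  web:
    image: nginx:alpine   # placeholder image
    cpus: 0.5             # at most half of one CPU core
    mem_limit: 256m       # hard memory limit
    pids_limit: 100       # cap on the number of processes
```

Running docker stats while the service is up shows usage measured against these limits.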

Key Linux distributions like Debian and Ubuntu are popular choices for Docker hosts due to their stability and wide community support. Docker’s daemon runs natively on these systems without additional virtualization layers.

Understanding basic Linux commands is valuable for Docker users. Commands for file manipulation, process management, and networking help troubleshoot container issues effectively.

Docker on Different Operating Systems

Docker works across multiple operating systems but functions differently on each platform. On Linux, Docker runs natively using the host kernel. On Windows and macOS, Docker requires additional components.

For Windows users, Docker Desktop installs the necessary tools to run containers. Windows offers two container types: Windows containers (using the Windows kernel) and Linux containers (running in a lightweight Linux VM, typically through WSL 2, the Windows Subsystem for Linux).

Windows containers are useful for .NET applications and other Windows-specific workloads. They provide compatibility with Windows-only dependencies and services.

Mac users rely on a lightweight virtual machine that hosts a Linux environment for running Docker. This creates a small performance overhead compared to native Linux implementations.

Docker Compose workflows remain consistent across different operating systems, with minimal command differences. This cross-platform compatibility makes Docker Compose an excellent tool for development teams using various systems.

Ecosystem and Community

Docker Compose exists within a vibrant ecosystem of tools, resources, and people who continuously improve container technology. This community provides valuable support for both beginners and experienced developers working with Docker and Docker Compose.

Getting Involved with the Docker Community

The Docker community welcomes developers of all skill levels through multiple channels. The Docker Community Forums serve as a central hub where users can ask questions and share knowledge about Docker Compose.

Docker Meetups happen worldwide, offering in-person networking and learning opportunities. These gatherings help developers connect with others using similar technologies in their region.

GitHub provides another avenue for community engagement. Developers can contribute to Docker projects by reporting bugs, suggesting features, or submitting code changes. This collaborative approach has strengthened the Docker ecosystem significantly.

Slack channels and Discord servers dedicated to Docker offer real-time help. Experienced users often assist newcomers with Docker client and daemon issues, creating a supportive learning environment.

Resources and Tools for Docker Developers

Developers working with Docker Compose have access to numerous resources that enhance productivity. The official Docker documentation provides comprehensive guides and reference materials for all aspects of Docker, including Docker Compose.

Several third-party tools complement the Docker ecosystem. Tools like Portainer offer graphical interfaces to manage containers, while Kompose helps convert Docker Compose files to Kubernetes resources.

Online learning platforms feature dedicated Docker courses ranging from beginner to advanced levels. These structured learning paths help developers master Docker concepts systematically.

IDE plugins for Visual Studio Code, IntelliJ, and other popular editors provide syntax highlighting and validation for Docker Compose YAML files. These integrations streamline the development workflow considerably.

Open-source example projects demonstrate best practices for Docker Compose configurations across different technology stacks, offering practical implementation guidance.

Portability and Development Workflow

Docker Compose creates consistent environments that work across different machines and enhances developer productivity through automation. These capabilities solve common development challenges and streamline workflows for teams of all sizes.

Creating Portable Docker Environments

Docker Compose makes applications portable by defining their services and dependencies in a single YAML file. The same stack can then be started the same way on a developer’s local machine, a testing server, or a production environment.

The docker-compose.yml file acts as a blueprint that describes services and their configurations, networks, volumes, and environment variables.
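A compact sketch showing all four elements in one file follows. The variable name, network name, volume name, and mount path are hypothetical:

```yaml
services:
  web:
    build: ./web
    environment:
      APP_ENV: development   # hypothetical environment variable
    networks:
      - backend
    volumes:
      - app_data:/data       # hypothetical mount path

networks:
  backend:                   # user-defined network shared by services

volumes:
  app_data:                  # named volume managed by Docker
```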

This approach eliminates the infamous “it works on my machine” problem. When developers share a Compose file, they share the exact environment specifications rather than lengthy setup instructions.

Docker Compose talks to the Docker Engine rather than to a hypervisor, so it behaves the same whether the engine runs natively on Linux or inside a virtual machine, for example under Hyper-V, VirtualBox, or WSL 2. This keeps workflows consistent across operating systems including Windows, macOS, and Linux.

Teams can version control their Compose files alongside application code, creating a complete snapshot of the development environment that travels with the codebase.

Enhancing Development Productivity

Docker Compose dramatically improves development workflow efficiency through automation and standardization. With a single command – docker-compose up – developers can launch an entire application stack.

This automation removes repetitive setup tasks that typically consume valuable development time: no manual container creation, no complex networking configuration, and no dependency installation conflicts.

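When source directories are bind-mounted into a container, changes to application code are visible inside it immediately without rebuilding the image, making the edit-test cycle much faster. Whether the running process picks up those changes depends on the application’s own reload mechanism.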
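A minimal sketch of such a mount, assuming the image runs its code from /app (the path depends on the Dockerfile and is an assumption here):

```yaml
services:
  web:
    build: ./web
    ports:
      - "8000:8000"
    volumes:
      - ./web:/app   # host source directory mounted over the code in the image
```

Edits under ./web on the host appear in /app inside the container without running a build.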

Docker Compose enables developers to simulate complex infrastructure locally. They can work with multi-container applications including databases, caching services, and message queues as a unified system.
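As an illustration, a local stack with a database, a cache, and a message queue could be sketched like this. The choice of Postgres, Redis, and RabbitMQ is an assumption made for the example:

```yaml
services:
  web:
    build: ./web
    depends_on:
      - database
      - cache
      - queue

  database:
    image: postgres
    environment:
      POSTGRES_PASSWORD: example

  cache:
    image: redis

  queue:
    image: rabbitmq
```

On the default Compose network, web reaches the other services by their service names (database, cache, queue) as hostnames.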

Teams benefit from standardized environments that ensure all members work under identical conditions, reducing debugging time and increasing collaboration efficiency.

Frequently Asked Questions

Docker Compose can be challenging to understand at first. These common questions address the most important aspects of working with Docker Compose, from basic concepts to practical implementation details.

What is Docker Compose and how is it used in development?

Docker Compose is a tool that helps developers define and run multi-container Docker applications. It uses YAML files to configure application services and simplifies the deployment process.

In development environments, Docker Compose allows teams to create consistent, isolated workspaces. Developers can focus on configuring an environment that matches production while avoiding the “it works on my machine” problem.

Docker Compose also makes it easy to link multiple services together, such as databases, caching systems, and web servers, with just a few commands.

How do you create a Docker Compose file to set up a multi-container application?

A Docker Compose file is written in YAML and is conventionally named docker-compose.yml; Compose V2 also looks for compose.yaml and prefers it. The file defines services, networks, and volumes for a multi-container setup.

Each service describes one or more containers started from the same image and includes configuration options like image, ports, environment variables, and volumes.

Here’s a simple example:

    version: '3'  # optional; recent Compose releases ignore this field
    services:
      web:
        build: ./web
        ports:
          - "8000:8000"
      database:
        image: postgres
        environment:
          POSTGRES_PASSWORD: example

What steps are involved in running a service-defined stack with Docker Compose?

Running a service stack with Docker Compose involves several straightforward steps. First, navigate to the directory containing your docker-compose.yml file in the terminal.

Next, run docker-compose up to start all services defined in the file. Add the -d flag (docker-compose up -d) to run containers in detached mode (background).

To stop the services, use docker-compose down. This command stops and removes the containers and networks created by up. Named volumes are kept by default; add the -v (--volumes) flag to remove them as well. The Docker Compose Quickstart guide provides excellent examples of these commands in action.

How do you specify and manage persistent data using volumes in Docker Compose?

Volumes in Docker Compose provide persistent data storage that exists beyond the lifecycle of containers. To specify a volume, add a volumes section to your Docker Compose file.

There are two main types of volumes: named volumes and bind mounts. Named volumes are managed by Docker, while bind mounts map a container path to a host path.

    services:
      database:
        image: postgres
        volumes:
          - postgres_data:/var/lib/postgresql/data  # named volume
          - ./backup:/backup  # bind mount

    volumes:
      postgres_data:  # define the named volume

This setup ensures data persists even when containers are destroyed and recreated.

In what situations would you need to use the ‘docker-compose build’ command?

The docker-compose build command is used when services in the Docker Compose file need to be built from source rather than pulled from a registry. This is common during active development.

Developers should use this command after making changes to a Dockerfile or when source code that’s part of the build context changes. The build command recreates images with the latest code.

It’s also helpful when working with development environments where images need frequent updates to reflect ongoing code changes.
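What docker-compose build actually does is controlled by each service’s build section. A sketch with hypothetical names (the Dockerfile name, the build argument, and the image tag are all assumptions):

```yaml
services:
  web:
    build:
      context: ./web               # directory sent to the builder
      dockerfile: Dockerfile.dev   # hypothetical Dockerfile name
      args:
        APP_VERSION: "1.2.3"       # hypothetical; requires ARG APP_VERSION in the Dockerfile
    image: example/web:dev         # hypothetical tag applied to the built image

# Rebuild only this service's image:
#   docker-compose build web
```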

How to update a running service using Docker Compose without downtime?

Updating a running service with minimal downtime requires careful orchestration.

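One way to do this is to run docker-compose up -d --no-deps --build followed by the service name, for example docker-compose up -d --no-deps --build web.

This command rebuilds the image and recreates only the specified service while leaving other services running. The --no-deps flag stops Compose from also starting or recreating the services that the target depends on. The service itself is still briefly unavailable while its container is replaced.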

For production environments, you can implement a blue-green deployment strategy. This involves creating a new instance of the service with updated code, then switching traffic once it’s ready.
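A rough sketch of blue-green with plain Compose is shown below. The service names, image tags, and the nginx configuration directory are hypothetical, and the proxy configuration is assumed to contain a proxy_pass line naming the live service:

```yaml
services:
  proxy:
    image: nginx
    ports:
      - "80:80"
    volumes:
      # Hypothetical directory holding a config with: proxy_pass http://web-blue:8000;
      - ./nginx/conf.d:/etc/nginx/conf.d:ro

  web-blue:
    image: example/web:1.0   # currently live version (hypothetical tag)

  web-green:
    image: example/web:1.1   # new version, started alongside blue

# Switch-over steps:
#   1. docker-compose up -d web-green          (start the new version)
#   2. verify web-green, then point proxy_pass at web-green
#   3. docker-compose exec proxy nginx -s reload
#   4. docker-compose stop web-blue            (once traffic has moved)
```

Because nginx reloads its configuration without dropping the listening socket, the switch in step 3 avoids the gap that recreating a single container would cause.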